In this blog post, we explore how to build container images using Docker in Docker (DinD) and compare it with other popular tools for cloud-native environments: Kaniko, Buildah, and BuildKit.

Introduction

By using a cloud-native approach, teams can build and deploy their applications more quickly and efficiently and can take advantage of the scalability, flexibility, and reliability of the cloud.

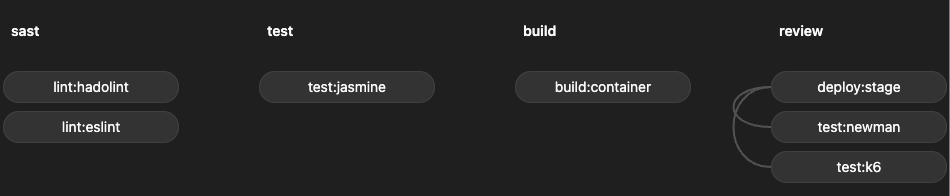

One of the key characteristics of cloud-native applications is that they are deployed as containers, which are typically built by continuous integration (CI) pipelines.

Container images are essential for using Kubernetes. A container image is a lightweight, standalone package that contains everything an application needs to run, including the code, libraries, dependencies, and runtime.

CI pipelines are essential for modern software development teams. By automating the process of building, testing, and deploying code, teams can save time and reduce the risk of errors.

Docker in Docker

Docker in Docker (DinD) is a technique for running a Docker daemon, and the Docker commands that talk to it, inside a Docker container. This allows you to use Docker inside a container without having to install Docker on the host machine. It is useful wherever installing Docker on the host is not possible or desirable, such as in a CI/CD pipeline or in a cloud-based environment.

However, DinD comes with security concerns: the Docker daemon inside the container could be compromised, or a container could escape its isolation and gain access to the host's resources. This is because the DinD container typically has to run in privileged mode, which gives the inner Docker daemon root-level access to the host's kernel and devices. To address these concerns, use DinD in a secure and controlled way and implement appropriate security measures, for example SELinux or AppArmor policies that restrict access to the host's resources.

Another potential downside of DinD in a CI pipeline is that it can be difficult to set up and configure. Because it runs a Docker daemon inside a container, it is more complex to configure and manage and may require additional steps and tools to work properly. For example, the Docker daemon may take a while to start, so you may need a waiting mechanism that ensures the daemon is up and running before the job runs any Docker commands. A tool such as wait-for can implement this waiting mechanism.
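If you would rather not download a script at the start of every job, a plain shell loop can serve as the waiting mechanism instead of wait-for. The fragment below is a minimal sketch, assuming the job image ships the Docker CLI (as the official `docker` images do) and that `DOCKER_HOST` and the TLS variables already point at the DinD service; the 30-attempt limit is an arbitrary choice.

```yaml
before_script:
  # Poll the DinD daemon once per second for up to 30 attempts.
  - |
    for i in $(seq 1 30); do
      docker info >/dev/null 2>&1 && break
      echo "Waiting for the Docker daemon ($i/30)..."
      sleep 1
    done
    # Fail the job with Docker's own error message if the daemon never came up.
    docker info >/dev/null
```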

GitLab CI pipeline using Docker in Docker:

```yaml
image: docker:20.10.16

variables:
  # When using dind service, you must instruct Docker to talk with
  # the daemon started inside of the service. The daemon is available
  # with a network connection instead of the default
  # /var/run/docker.sock socket.
  DOCKER_HOST: tcp://docker:2376
  # Specify to Docker where to create the certificates. Docker
  # creates them automatically on boot, and creates
  # `/certs/client` to share between the service and job
  # container, thanks to volume mount from config.toml
  DOCKER_TLS_CERTDIR: "/certs"
  # These are usually specified by the entrypoint, however the
  # Kubernetes executor doesn't run entrypoints
  # https://gitlab.com/gitlab-org/gitlab-runner/-/issues/4125
  DOCKER_TLS_VERIFY: 1
  DOCKER_CERT_PATH: "$DOCKER_TLS_CERTDIR/client"

services:
  - docker:20.10.16-dind

before_script:
  # Block until the DinD daemon accepts connections on port 2376.
  - wget -qO- https://raw.githubusercontent.com/eficode/wait-for/v2.2.3/wait-for | sh -s -- docker:2376 -- echo "Docker Daemon is up"

build:
  stage: build
  script:
    - docker build -t my-docker-image .
```

Kaniko

https://github.com/GoogleContainerTools/Kaniko

Kaniko is a tool designed for building container images inside a container or a Kubernetes cluster without the need for a Docker daemon. It runs as a container and executes each Dockerfile command in userspace: it extracts the base image's filesystem inside its own container, runs the build commands, and snapshots the filesystem after each one to produce the image layers. This allows teams to build container images in a consistent and reliable way, even in environments where running a Docker daemon is not possible. It is optimized for use in CI pipelines, which makes it a popular choice for teams building container images in a cloud-native environment.

Some of the key advantages of Kaniko include:

One of the main advantages of Kaniko is that it does not require a running Docker daemon to build container images. This makes it inherently easier to set up and more secure, because it reduces the potential attack surface.

Another advantage of Kaniko is that it can run in a non-privileged container. This is a security benefit, as it reduces the risk of a compromised Kaniko container being used to gain access to the host machine.

Additionally, Kaniko can store cache layers in a container registry. This is helpful in cloud-native pipelines, where each job is ephemeral and there is no shared, persistent storage between individual runs. Kaniko also lets you configure the cache time-to-live (TTL) to control how long cached layers stay valid, which is useful when you want a rebuild to fetch fresh OS packages after some time.

Kaniko is available as a lightweight official container image, which makes it easy to pull and run in any environment that supports containers. The image includes credential helpers for popular container registries, which makes it easy to authenticate and push images to the registry.
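To illustrate the registry cache, the TTL, and the credential setup described above, here is a minimal GitLab CI job sketch. It assumes GitLab's built-in container registry (the predefined `CI_REGISTRY*` variables) and a `Dockerfile` at the repository root; the job name, the `/cache` repository path, and the 24-hour TTL are illustrative choices, not recommendations.

```yaml
build-kaniko:
  stage: build
  image:
    # The debug tag includes a BusyBox shell, which GitLab CI needs to run `script`.
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  script:
    # Write registry credentials to the Docker config file that Kaniko reads.
    - |
      AUTH=$(printf "%s:%s" "${CI_REGISTRY_USER}" "${CI_REGISTRY_PASSWORD}" | base64 | tr -d '\n')
      echo "{\"auths\":{\"${CI_REGISTRY}\":{\"auth\":\"${AUTH}\"}}}" > /kaniko/.docker/config.json
    # Build and push; cached layers go to a separate repository and expire after 24h.
    - /kaniko/executor
      --context "${CI_PROJECT_DIR}"
      --dockerfile "${CI_PROJECT_DIR}/Dockerfile"
      --destination "${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}"
      --cache=true
      --cache-repo "${CI_REGISTRY_IMAGE}/cache"
      --cache-ttl=24h
```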

However, there are also some potential disadvantages of Kaniko that you should be aware of:

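One of the main drawbacks of Kaniko is that it requires running in rootful mode. Kaniko does not need a privileged container, but it must run as the root user (UID 0) inside its container, because it unpacks the base image and executes the build commands directly on that container's own root filesystem. This can be a security concern: if the build or the container is compromised, the attacker operates as root inside the container, which can still expose the host machine to security risks.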

Another potential disadvantage of Kaniko is that it does not support building multi-architecture images: a single Kaniko run can only build an image for one architecture, such as x86_64. This can be limiting for teams that need to build images for multiple architectures, such as x86_64, arm64, and armv7. You can work around this by running the build on a node with the desired architecture.
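One way to apply that workaround in GitLab CI is a `parallel:matrix` job that sends each architecture to a matching runner. This is a sketch under assumptions: the `amd64` and `arm64` runner tags are hypothetical and presume you operate one runner pool per CPU architecture, and registry authentication is left out for brevity.

```yaml
build-per-arch:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  parallel:
    matrix:
      - ARCH: [amd64, arm64]
  tags:
    # Hypothetical runner tags; each matrix job lands on a node of that architecture.
    - ${ARCH}
  script:
    # Registry authentication omitted; each run pushes an architecture-suffixed tag.
    - /kaniko/executor
      --context "${CI_PROJECT_DIR}"
      --dockerfile "${CI_PROJECT_DIR}/Dockerfile"
      --destination "${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}-${ARCH}"
```

Each job produces a separate single-architecture tag. Combining those tags into one multi-architecture manifest list requires an additional tool, since Kaniko does not create manifest lists itself.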

Additionally, Kaniko is only available for Linux, which means it cannot be used natively on other operating systems such as Windows or macOS. This can be limiting for teams that use these operating systems and want to build container images locally with Kaniko to keep their local environment consistent with the CI pipeline.

You could also encounter a few issues if you are not careful:

One of the main issues with Kaniko was that earlier versions did not follow symlinks when copying files into the container image. This could result in incomplete or broken images that fail at runtime. You should be fine with recent versions of Kaniko.

Another issue with Kaniko is that it directly modifies the root filesystem of the container it runs in to build the image. This can cause problems with certain operating systems or package managers. One example is Alpine's `apk`: starting with Alpine 3.12, running `apk upgrade` tries to purge the /var/run directory and fails with a permission-denied error, because the Kubernetes Pod's service account token is mounted under that path, making it read-only.

Overall, Kaniko is a powerful tool for building container images in cloud-native CI/CD pipelines. Its efficiency and ease of use make it a popular choice for many teams, but it may not be the best fit for all environments and applications.

Buildah

Buildah is a tool for building and managing container images. It is designed to be fast, efficient, and lightweight, and it can build container images without running a Docker daemon. It is an open-source project that was first developed by Red Hat back in 2017 and is maintained under the containers organization on GitHub, alongside related tools such as Podman.

Buildah is also compliant with the Open Container Initiative (OCI) specifications. The OCI is an open project that defines standards for container image formats and runtimes, and these standards are supported by major container runtime and orchestration tools such as Docker and Kubernetes. Because it complies with the OCI specifications, Buildah works with other OCI-compatible tools, and the images it builds are portable and interoperable.

In comparison with Kaniko, there are a few things that Buildah brings to the table:

One of the main advantages is rootless mode, which allows Buildah to build container images without root privileges. Running the build as a non-root user reduces the potential attack surface and the risk of the container being compromised, which helps protect the host machine from security breaches and unauthorized access to sensitive data or resources.

The second addition is support for multi-platform builds. Buildah can build multi-architecture images using QEMU, a generic, open-source machine emulator and virtualizer that can run programs built for other CPU architectures. With QEMU, Buildah can build container images for multiple architectures on a single machine.

However, using QEMU for multi-architecture builds has a performance cost. Because QEMU emulates the target CPU in software, building an image for a foreign architecture is slower and less efficient than building it natively, so multi-architecture builds with Buildah take noticeably longer than single-architecture builds.
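A multi-platform Buildah build in GitLab CI could look roughly like the sketch below. It assumes the runner node has QEMU user-mode emulation registered with binfmt_misc and that the job container is allowed to run Buildah at all (see the rootless caveats later in this section); `my-app` is a hypothetical local manifest-list name.

```yaml
build-buildah-multiarch:
  stage: build
  image: quay.io/buildah/stable
  variables:
    # chroot isolation runs RUN steps without a full OCI runtime, common when Buildah runs in a container.
    BUILDAH_ISOLATION: chroot
  script:
    - echo "${CI_REGISTRY_PASSWORD}" | buildah login -u "${CI_REGISTRY_USER}" --password-stdin "${CI_REGISTRY}"
    # Build one image per platform (foreign ones via QEMU) and collect them in a manifest list.
    - buildah build --platform linux/amd64,linux/arm64 --manifest my-app .
    # Push the manifest list together with every image it references.
    - buildah manifest push --all my-app "docker://${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}"
```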

As for caching, Buildah offers the same options as Kaniko: it can store cache layers in a container registry and set a TTL for them.
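For comparison with Kaniko's cache flags, a Buildah job that keeps its layer cache in a registry might look like this sketch. The `/cache` repository path and the 24-hour TTL are illustrative; note that `--cache-from` and `--cache-to` only take effect together with `--layers`.

```yaml
build-buildah-cache:
  stage: build
  image: quay.io/buildah/stable
  script:
    - echo "${CI_REGISTRY_PASSWORD}" | buildah login -u "${CI_REGISTRY_USER}" --password-stdin "${CI_REGISTRY}"
    # Reuse cached layers from the registry, push new ones back, ignore entries older than 24h.
    - buildah build
      --layers
      --cache-from "${CI_REGISTRY_IMAGE}/cache"
      --cache-to "${CI_REGISTRY_IMAGE}/cache"
      --cache-ttl 24h
      -t "${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}" .
    # With a single argument, buildah push uses the image name as the destination.
    - buildah push "${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}"
```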

Not everything is perfect, though:

On the other hand, its official container image is not as lightweight as Kaniko's, and it does not include credential helpers, so you will need to bundle them yourself.

Like Kaniko, Buildah supports only Linux.

When we evaluated Buildah in our environment, we encountered a few issues with its rootless mode when running inside an unprivileged container. In that setup, it requires the `vfs` storage driver instead of `overlay`, and `vfs` is significantly slower because it stores a full copy of the filesystem for every layer. We also hit permission issues when mapping UIDs/GIDs from the host into the user namespace where the build is executed. There are a number of GitHub issues related to this topic, but none with a clear solution.
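For reference, selecting the slower `vfs` driver is a single global Buildah option. The job below is a minimal sketch that assumes the runner already starts the job container as a non-root user with subordinate UID/GID ranges configured, which is exactly the part we found hard to get right.

```yaml
build-buildah-rootless:
  stage: build
  image: quay.io/buildah/stable
  variables:
    BUILDAH_ISOLATION: chroot
  script:
    # Use the vfs storage driver, since overlay was not usable in our unprivileged setup.
    - buildah --storage-driver vfs build -t "${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}" .
```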

In general, Buildah's feature set is on par with Kaniko's and offers only a few additions, so switching to Buildah when you are already using Kaniko might not be an obvious choice.

BuildKit

https://github.com/moby/BuildKit

BuildKit is a toolkit for building container images. It was developed by Docker, Inc. to improve the performance and scalability of the image-building process. The project started in 2017, became available in Docker 18.09 as an opt-in builder, and has since been integrated into the Docker Engine as a core component (it is the default builder on Linux since Docker Engine 23.0). BuildKit supports the OCI (Open Container Initiative) image specification and is able to build OCI-compliant images.

Because it ships with Docker, BuildKit can be used on all major platforms that Docker supports, including Linux, macOS, and Windows (on macOS and Windows, Docker Desktop runs the build daemon inside a Linux virtual machine). So as long as you have a recent version of Docker installed, you can use BuildKit to build container images. To unlock its full potential, however, you will need the `buildx` plugin.

It supports a wide range of features, including build caching, multi-stage and multi-platform builds, and parallel execution of independent build steps.
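BuildKit can also run directly in a CI job, without Docker. The sketch below follows the common pattern of running the `moby/buildkit:rootless` image with its `buildctl-daemonless.sh` helper, which starts a short-lived `buildkitd` next to the `buildctl` client. It assumes GitLab's built-in registry, QEMU emulation registered on the node for the non-native platform, and pod security settings that permit rootless BuildKit; the two-platform list is illustrative.

```yaml
build-buildkit:
  stage: build
  image:
    name: moby/buildkit:rootless
    entrypoint: [""]
  variables:
    # Flags for the temporary buildkitd; needed in a non-privileged container.
    BUILDKITD_FLAGS: --oci-worker-no-process-sandbox
  before_script:
    # buildctl reads registry credentials from the Docker config file.
    - mkdir -p ~/.docker
    - |
      echo "{\"auths\":{\"${CI_REGISTRY}\":{\"username\":\"${CI_REGISTRY_USER}\",\"password\":\"${CI_REGISTRY_PASSWORD}\"}}}" > ~/.docker/config.json
  script:
    # Build for two platforms with the Dockerfile frontend and push the result.
    - buildctl-daemonless.sh build
      --frontend dockerfile.v0
      --local context=.
      --local dockerfile=.
      --opt platform=linux/amd64,linux/arm64
      --output type=image,name=${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA},push=true
```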

To give an example of BuildKit's build caching, here are two very neat features that are exclusive to BuildKit:

Use the dedicated RUN cache

The RUN instruction supports a specialized cache, which you can use when you need a more fine-grained cache between runs. For example, when installing packages, you don’t always need to fetch all of your packages from the internet each time; you only need the ones that have changed. To solve this problem, you can use `RUN --mount=type=cache`. For example, for a Debian-based image you might use the following:

```dockerfile
RUN \
    --mount=type=cache,target=/var/cache/apt \
    apt-get update && apt-get install -y git
```

COPY --link

Enabling this flag in COPY or ADD instructions allows you to copy files with enhanced semantics: your files remain independent on their own layer and don’t get invalidated when instructions on previous layers change. Use `--link` to reuse already built layers in subsequent builds with `--cache-from`, even if the previous layers have changed. This is especially important for multi-stage builds, where a `COPY --from` statement would previously get invalidated if any previous instruction in the same stage changed, forcing the intermediate stages to be rebuilt. With `--link`, the layer generated by the previous build is reused and merged on top of the new layers. This also means you can easily rebase your images when the base images receive updates, without having to execute the whole build again. In backends that support it, BuildKit can do this rebase without pushing or pulling any layers between the client and the registry: it detects this case and only creates a new image manifest that contains the new layers and old layers in the correct order.

From our observations, the `COPY --link` addition is a real game changer in terms of build performance.
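Layers created with `COPY --link` pay off when a later build can import the previous build's cache. With `buildctl`, exporting and importing a registry cache might look like the sketch below. The `:buildcache` tag is a hypothetical naming choice, `mode=max` also exports the layers of intermediate stages, and registry authentication is omitted for brevity.

```yaml
build-buildkit-cache:
  stage: build
  image:
    name: moby/buildkit:rootless
    entrypoint: [""]
  variables:
    BUILDKITD_FLAGS: --oci-worker-no-process-sandbox
  script:
    # Import the cache pushed by the previous run, then export an updated one.
    - buildctl-daemonless.sh build
      --frontend dockerfile.v0
      --local context=.
      --local dockerfile=.
      --import-cache type=registry,ref=${CI_REGISTRY_IMAGE}:buildcache
      --export-cache type=registry,ref=${CI_REGISTRY_IMAGE}:buildcache,mode=max
      --output type=image,name=${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA},push=true
```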

To highlight other useful features, you can:

- Specify multiple destination registries and tags with the `--output` build option, so you can push the image to multiple destinations at once without needing to re-tag it.

- Offload cache layers to blob storage, e.g. S3 or Azure Blob Storage, which is generally cheaper, although these cache backends are still experimental.
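Both options can be combined in a single `buildctl` invocation, sketched below. The quoted, comma-separated `name` list pushes one build to two destinations, and the S3 cache backend is experimental. `MIRROR_IMAGE`, the bucket, the region, and the cache name are hypothetical, and the sketch assumes registry credentials for both destinations and AWS credentials (via the standard AWS environment variables) are already available to the job.

```yaml
build-buildkit-s3:
  stage: build
  image:
    name: moby/buildkit:rootless
    entrypoint: [""]
  variables:
    BUILDKITD_FLAGS: --oci-worker-no-process-sandbox
    # Hypothetical second destination, e.g. a mirror registry.
    MIRROR_IMAGE: registry.example.com/team/app
  script:
    # The escaped quotes keep the comma-separated name list as one value for buildctl.
    - |
      buildctl-daemonless.sh build \
        --frontend dockerfile.v0 \
        --local context=. \
        --local dockerfile=. \
        --output type=image,\"name=${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA},${MIRROR_IMAGE}:${CI_COMMIT_SHORT_SHA}\",push=true \
        --import-cache type=s3,region=eu-west-1,bucket=my-build-cache,name=app \
        --export-cache type=s3,region=eu-west-1,bucket=my-build-cache,name=app
```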

When looking at potential drawbacks, you should bear in mind:

BuildKit uses a daemon (`buildkitd`) to build the image, and we have already discussed the possible issues with this approach. To alleviate some of them, the daemon can run in rootless mode, but this adds configuration burden, as some security settings need to be adjusted when running in a non-privileged container:

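- You will need to add the `--oci-worker-no-process-sandbox` flag to the BuildKit daemon, because a non-privileged container cannot set up the process (PID namespace) sandbox that BuildKit normally uses for build steps. The trade-off is that build steps can then kill (and, depending on the seccomp configuration, ptrace) other processes running in the same container as the daemon.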

- If your environment uses the AppArmor or seccomp kernel security features, you will need to set the BuildKit container's profiles to `unconfined`.
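When `buildkitd` runs as a long-lived rootless pod instead, those tweaks end up in the pod spec. The manifest below is a sketch along the lines of BuildKit's rootless Kubernetes examples; the pod name is illustrative. It uses the AppArmor annotation understood by older clusters, while newer Kubernetes versions also accept an `appArmorProfile` field in `securityContext`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: buildkitd
  annotations:
    # Run the buildkitd container without an AppArmor profile.
    container.apparmor.security.beta.kubernetes.io/buildkitd: unconfined
spec:
  containers:
    - name: buildkitd
      image: moby/buildkit:rootless
      args:
        # Required when the daemon runs in a non-privileged container.
        - --oci-worker-no-process-sandbox
      securityContext:
        # Disable seccomp filtering for this container.
        seccompProfile:
          type: Unconfined
        runAsUser: 1000
        runAsGroup: 1000
```

Without an `--addr` argument, the daemon listens on its default rootless Unix socket inside the pod; a client such as `buildctl --addr kube-pod://buildkitd` can then reach it through `kubectl exec`.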

On the cache side, if you would like to export cache layers and the cache manifest to a container registry, you won’t be able to use AWS ECR for that purpose, because the manifest format BuildKit uses for exported cache was not supported by ECR at the time of writing. See https://github.com/aws/containers-roadmap/issues/876 for more details.

Also, there is no TTL mechanism for the cache layers.

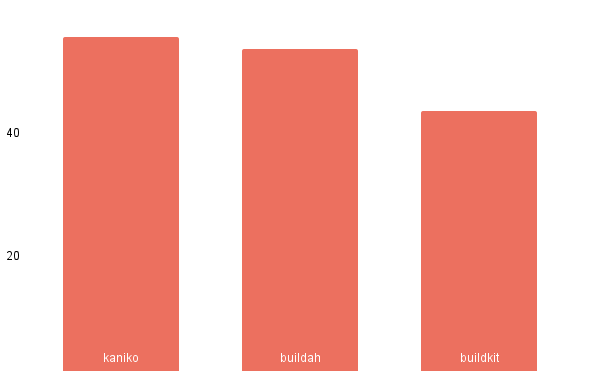

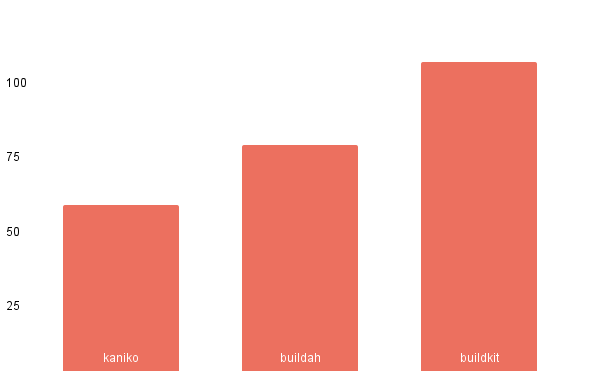

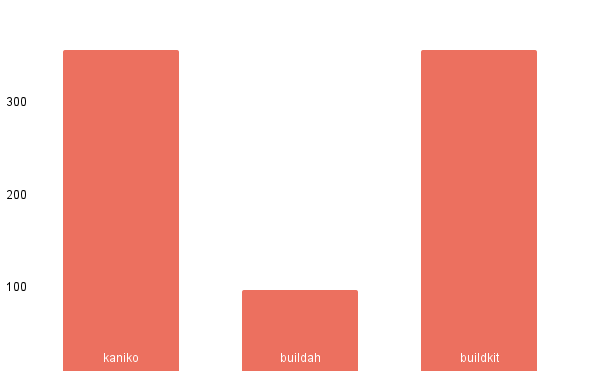

Measurements

In our search for the tool that best matches our approach to building container images and our environments, we also took measurements focusing on build time, CPU, and memory utilization.

We tested the container image build tools in an isolated environment, on a dedicated node, without any cache involved. Here are the results:

Conclusion

All of these tools are widely used and well regarded, so it’s difficult to say which one is “best” without knowing more about your specific needs and use case. Each tool has its own strengths and weaknesses, so it’s important to choose the one that best fits your needs.

“We have chosen BuildKit”

[2] https://docs.docker.com/build/building/cache

[3] https://docs.docker.com/engine/reference/builder/
